Independent and identically distributed returns

A very common assumption in the modelling of financial returns is independence in time. In other words, today's returns (or those of any other past day) are of no use in predicting tomorrow's returns. Another very popular assumption is that the returns on every day follow the same distribution. Combining the two assumptions, we claim that there is some underlying multivariate distribution and that the returns we observe are independent samples from it.
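To make the independence claim concrete, here is a minimal sketch that draws IID samples from a small, made-up two-asset distribution and measures how strongly today's return is correlated with tomorrow's. The numbers in `mean` and `covmat` are hypothetical and only serve the illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical two-asset market: fixed mean vector and covariance matrix.
mean = np.array([0.0005, 0.0003])
covmat = np.array([[1.0e-4, 2.0e-5],
                   [2.0e-5, 4.0e-5]])

# Each row is one day; rows are drawn independently from the same distribution.
returns = rng.multivariate_normal(mean, covmat, size=5000)

# Lag-1 autocorrelation of the first asset: today's return vs tomorrow's.
today, tomorrow = returns[:-1, 0], returns[1:, 0]
lag1_corr = np.corrcoef(today, tomorrow)[0, 1]
print(f"lag-1 autocorrelation: {lag1_corr:.4f}")
```

Under IID dynamics the printed value should be close to zero, which is exactly why lagged returns carry no predictive information.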

deepdow can be applied in a market with the IID dynamics described above. This example analyzes how good deepdow is at learning optimal portfolios given these dynamics.

Note

deepdow contains many tools that help extract hidden features from the original ones. However, under the IID setup the lagged returns have no predictive value, so we effectively have zero features, which is very unusual from the point of view of standard machine learning applications. Nevertheless, nothing prevents us from learning a set of constants that uniquely determine the final allocation. As in the more standard case with features, these parameters can be learned by minimizing an empirical loss based on mean returns, the Sharpe ratio, etc.
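The idea of learning constants without any input features can be sketched in plain numpy. The example below is not how deepdow does it: it is a hypothetical stand-in in which the only learnable parameters are softmax logits `z`, and gradient descent minimizes the empirical portfolio variance. The market (three uncorrelated assets) and the learning rate are assumptions chosen for the illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical IID market with three uncorrelated assets of different volatility.
stds = np.array([0.01, 0.02, 0.03])
returns = rng.normal(0.0, stds, size=(2000, 3))
sample_cov = np.cov(returns, rowvar=False)


def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()


def portfolio_variance(w):
    return float(w @ sample_cov @ w)


# The only learnable parameters are the logits `z`; there are no input features.
z = np.zeros(3)
initial_variance = portfolio_variance(softmax(z))

learning_rate = 1000.0  # large because variances of daily returns are tiny
for _ in range(500):
    w = softmax(z)
    grad_w = 2 * sample_cov @ w          # d(variance) / d(weights)
    grad_z = w * (grad_w - w @ grad_w)   # chain rule through the softmax
    z -= learning_rate * grad_z

learned_weights = softmax(z)
learned_variance = portfolio_variance(learned_weights)
print(learned_weights, initial_variance, learned_variance)
```

The learned weights shift towards the least volatile asset, even though the "model" never receives any features as input.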

This example is divided into the following sections

Creation of synthetic return series

Convex optimization given the ground truth

Sample estimators of covmat and mean

Implementation and setup of a deepdow network

Training and evaluation

Preliminaries

Let us start with importing all important dependencies.

import cvxpy as cp

from deepdow.benchmarks import Benchmark

from deepdow.callbacks import EarlyStoppingCallback

from deepdow.data import InRAMDataset, RigidDataLoader

from deepdow.layers import NumericalMarkowitz

from deepdow.losses import MeanReturns, StandardDeviation

from deepdow.experiments import Run

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import seaborn as sns

import torch

Let us also set seeds for both numpy and torch.

torch.manual_seed(4)

np.random.seed(21)

Creation of synthetic return series

To make the generated samples realistic, we base them on S&P 500 stocks. Namely, we precomputed the daily mean returns and the covariance matrix for a subset of the stocks, so they can simply be loaded.

mean_all = pd.read_csv('sp500_mean.csv', index_col=0, header=None).iloc[:, 0]

covmat_all = pd.read_csv('sp500_covmat.csv', index_col=0)

Let us now randomly select some assets

n_assets = 15

asset_ixs = np.random.choice(len(mean_all), replace=False, size=n_assets)

mean = mean_all.iloc[asset_ixs]

covmat = covmat_all.iloc[asset_ixs, asset_ixs]

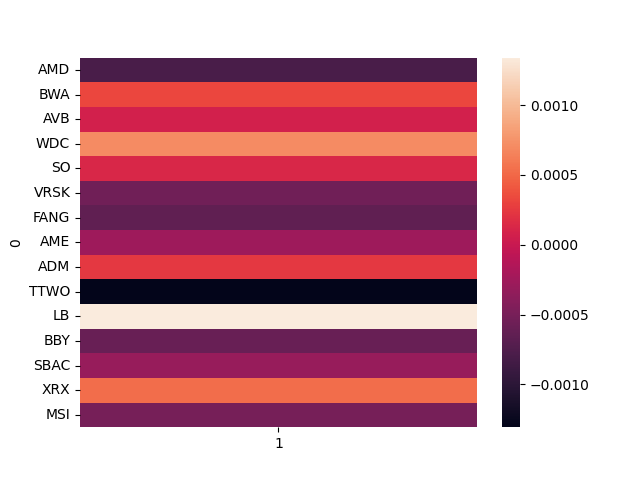

These are the expected returns

sns.heatmap(mean.to_frame())

<AxesSubplot:ylabel='0'>

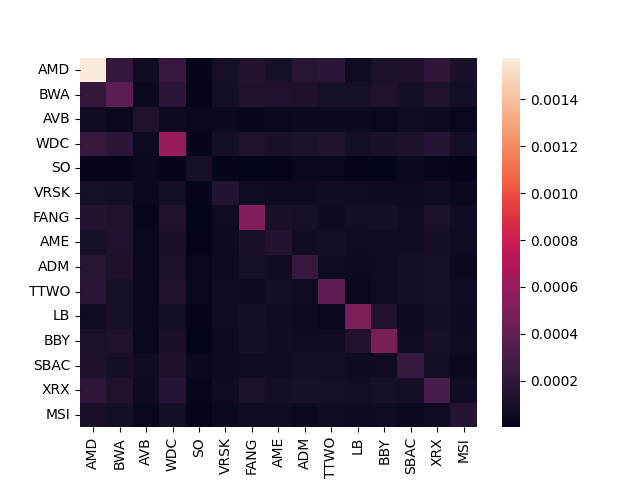

And this is the covariance matrix

sns.heatmap(covmat)

<AxesSubplot:>

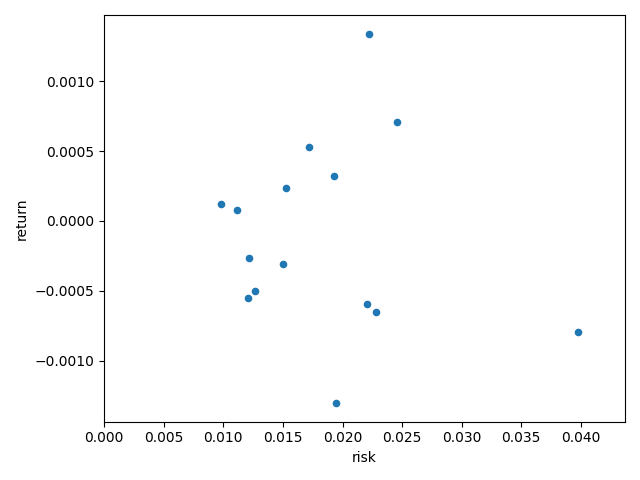

Additionally let us inspect them in the risk and return plane

df_risk_ret = pd.DataFrame({'risk': np.diag(covmat) ** (1 / 2),

'return': mean.values})

x_lim = (0, df_risk_ret['risk'].max() * 1.1)

y_lim = (df_risk_ret['return'].min() * 1.1, df_risk_ret['return'].max() * 1.1)

ax = df_risk_ret.plot.scatter(x='risk', y='return')

ax.set_xlim(x_lim)

ax.set_ylim(y_lim)

plt.tight_layout()

From now on we see the mean and covmat as the ground truth parameters that fully determine the market dynamics. We now generate some samples from a multivariate normal distribution with mean and covmat as the defining parameters.

n_timesteps = 1500

returns = pd.DataFrame(np.random.multivariate_normal(mean.values,

covmat.values,

size=n_timesteps,

check_valid='raise'), columns=mean.index)
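For intuition about what sampling from a multivariate normal distribution involves, here is a minimal sketch based on the Cholesky factorization. It is one standard way of doing it and not necessarily what `np.random.multivariate_normal` does internally. The two-asset `mean` and `covmat` are hypothetical values.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical ground truth for two assets (not the S&P 500 values).
mean = np.array([0.001, 0.0005])
covmat = np.array([[4.0e-4, 1.0e-4],
                   [1.0e-4, 2.0e-4]])

# Factor covmat = chol @ chol.T, then map standard normal draws z to mean + chol @ z.
chol = np.linalg.cholesky(covmat)
z = rng.standard_normal(size=(100_000, 2))
samples = mean + z @ chol.T

sample_mean = samples.mean(axis=0)
sample_cov = np.cov(samples, rowvar=False)
print(sample_mean)
print(sample_cov)
```

With this many samples the printed estimates should be close to the ground truth parameters.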

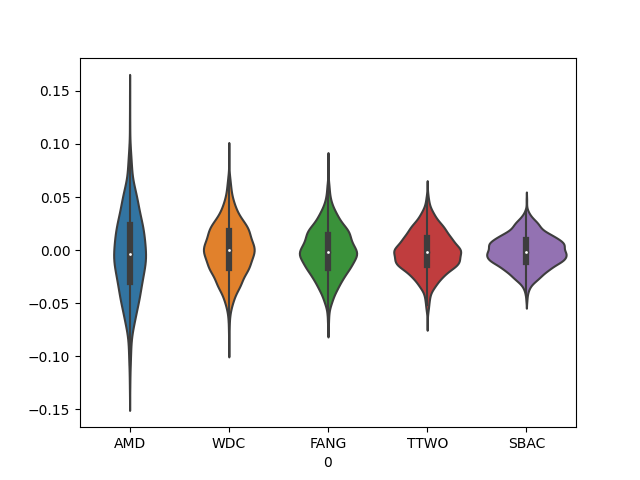

sns.violinplot(data=returns.iloc[:, ::3])

<AxesSubplot:xlabel='0'>

Convex optimization given the ground truth

Since we possess the ground truth mean and covmat, we can find the optimal weight allocation given some objective and constraints. We are going to consider the following objectives

Minimum variance

Maximum return

Maximum utility
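Written out, and assuming the constraints used in the implementation further down (weights sum to one, are non-negative and are bounded above by a cap), the three problems read as follows. The symbols $\mu$, $\Sigma$, $\gamma$ and $w_{\max}$ are our notation for mean, covmat, gamma and max_weight.

```latex
\begin{aligned}
\text{Minimum variance:} \quad & \min_{w} \; w^\top \Sigma w \\
\text{Maximum return:} \quad & \max_{w} \; \mu^\top w \\
\text{Maximum utility:} \quad & \max_{w} \; \mu^\top w - \gamma \, w^\top \Sigma w \\
\text{subject to} \quad & \mathbf{1}^\top w = 1, \quad 0 \le w_i \le w_{\max} \;\; \text{for all } i.
\end{aligned}
```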

Since every combination of our objectives and constraints results in a convex optimization problem over a bounded feasible set, an optimal solution exists and any local optimum is also a global one. The solution is guaranteed to be unique only when the objective is strictly convex, for example minimum variance with a positive definite covariance matrix. We will use cvxpy to find the solution numerically. For simplicity, we will refer to all of these optimization problems as Markowitz optimization problems. See the implementation below.

class Markowitz:
    """Solutions to Markowitz optimization problems.

    Parameters
    ----------
    mean : np.ndarray
        1D array representing the mean of returns. Has shape `(n_assets,)`.

    covmat : np.ndarray
        2D array representing the covariance matrix of returns. Has shape `(n_assets, n_assets)`.
    """

    def __init__(self, mean, covmat):
        # Reject the input if either of the two arrays has the wrong number of dimensions
        if mean.ndim != 1 or covmat.ndim != 2:
            raise ValueError('mean needs to be 1D and covmat 2D.')

        if not (mean.shape[0] == covmat.shape[0] == covmat.shape[1]):
            raise ValueError('Mean and covmat need to have the same number of assets.')

        self.mean = mean
        self.covmat = covmat
        self.n_assets = self.mean.shape[0]

    def minvar(self, max_weight=1.):
        """Compute minimum variance portfolio."""
        w = cp.Variable(self.n_assets)
        risk = cp.quad_form(w, self.covmat)
        prob = cp.Problem(cp.Minimize(risk),
                          [cp.sum(w) == 1,
                           w <= max_weight,
                           w >= 0])
        prob.solve()

        return w.value

    def maxret(self, max_weight=1.):
        """Compute maximum return portfolio."""
        w = cp.Variable(self.n_assets)
        ret = self.mean @ w
        prob = cp.Problem(cp.Maximize(ret),
                          [cp.sum(w) == 1,
                           w <= max_weight,
                           w >= 0])
        prob.solve()

        return w.value

    def maxutil(self, gamma, max_weight=1.):
        """Maximize utility."""
        w = cp.Variable(self.n_assets)
        ret = self.mean @ w
        risk = cp.quad_form(w, self.covmat)
        prob = cp.Problem(cp.Maximize(ret - gamma * risk),
                          [cp.sum(w) == 1,
                           w <= max_weight,
                           w >= 0])
        prob.solve()

        return w.value

    def compute_portfolio_moments(self, weights):
        """Compute mean and standard deviation of some allocation.

        Parameters
        ----------
        weights : np.array
            1D array representing weights of a portfolio. Has shape `(n_assets,)`.

        Returns
        -------
        mean : float
            Mean (return)

        std : float
            Standard deviation (risk)
        """
        pmean = np.inner(weights, self.mean)
        pmean = pmean.item()

        pvar = weights @ self.covmat @ weights
        pvar = pvar.item()

        return pmean, pvar ** (1 / 2)
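As a sanity check that does not depend on a solver, the minimum variance problem has a closed-form solution if we keep only the budget constraint (weights sum to one) and drop the non-negativity and max_weight constraints. The sketch below makes exactly that simplifying assumption, so its weights may be negative in general and need not match the constrained solution. The covariance matrix is hypothetical.

```python
import numpy as np

# Hypothetical covariance matrix of three assets.
covmat = np.array([[4.0e-4, 1.0e-4, 0.0],
                   [1.0e-4, 2.0e-4, 5.0e-5],
                   [0.0, 5.0e-5, 1.0e-4]])

ones = np.ones(len(covmat))

# Solve covmat @ x = 1 and normalize so that the weights sum to one.
x = np.linalg.solve(covmat, ones)
w_minvar = x / x.sum()

# Compare against the equally weighted portfolio.
w_equal = ones / len(ones)
var_minvar = w_minvar @ covmat @ w_minvar
var_equal = w_equal @ covmat @ w_equal
print(w_minvar, var_minvar, var_equal)
```

The variance of `w_minvar` cannot exceed that of any other fully invested portfolio, including the equally weighted one.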

We can now compute all the relevant optimal portfolios. We consider two cases with respect to max_weight: constrained (max_weight < 1) and unconstrained (max_weight = 1).

markowitz = Markowitz(mean.values, covmat.values)

max_weight = 2 / n_assets

gamma = 10

optimal_portfolios_u = {'minvar': markowitz.minvar(max_weight=1.),

'maxret': markowitz.maxret(max_weight=1.),

'maxutil': markowitz.maxutil(gamma=gamma, max_weight=1.),

}

optimal_portfolios_c = {'minvar': markowitz.minvar(max_weight=max_weight),

'maxret': markowitz.maxret(max_weight=max_weight),

'maxutil': markowitz.maxutil(gamma=gamma, max_weight=max_weight)}
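The effect of max_weight is easiest to see for the maximum return objective, where the linear program can be solved greedily: fill the assets in order of decreasing expected return until the budget is used up. The helper `maxret_greedy` and the expected returns below are hypothetical and serve as an illustration only. The sketch assumes `max_weight * n_assets >= 1`, otherwise the problem is infeasible.

```python
import numpy as np


def maxret_greedy(mean, max_weight=1.0):
    """Fill assets in order of decreasing expected return, up to the cap."""
    weights = np.zeros(len(mean))
    remaining = 1.0
    for ix in np.argsort(mean)[::-1]:
        weights[ix] = min(max_weight, remaining)
        remaining -= weights[ix]
        if remaining <= 0:
            break
    return weights


# Hypothetical expected returns for 15 assets (not the S&P 500 values).
mean = np.linspace(-0.001, 0.002, 15)

w_unconstrained = maxret_greedy(mean, max_weight=1.0)
w_constrained = maxret_greedy(mean, max_weight=2 / 15)
print(w_unconstrained)
print(w_constrained)
```

Without the cap everything goes into the single best asset. With a cap of 2 / 15 the budget is spread over the eight best assets, the last of which receives only the leftover 1 / 15.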

Let’s write some visualization machinery!

color_mapping = {'minvar': 'r',

'maxret': 'g',

'maxutil': 'yellow'}

mpl_config = {'s': 100,

'alpha': 0.65,

'linewidth': 0.5,

'edgecolor': 'black'}

marker_mapping = {'true_c': '*',

'true_u': 'o',

'emp_c': 'v',

'emp_u': 'p',

'deep_c': 'P',

'deep_u': 'X'}

def plot_scatter(title='', **risk_ret_portfolios):
    ax = df_risk_ret.plot.scatter(x='risk', y='return')
    ax.set_xlim(x_lim)
    ax.set_ylim(y_lim)
    plt.title(title)
    plt.tight_layout()

    all_points = []
    all_names = []
    for name, portfolios in risk_ret_portfolios.items():
        for objective, w in portfolios.items():
            y, x = markowitz.compute_portfolio_moments(w)
            all_points.append(ax.scatter(x,
                                         y,
                                         c=color_mapping[objective],
                                         marker=marker_mapping[name],
                                         **mpl_config))
            all_names.append("{}_{}".format(objective, name))

    plt.legend(all_points,
               all_names,
               scatterpoints=1,
               loc='lower left',
               ncol=len(risk_ret_portfolios),
               fontsize=8)

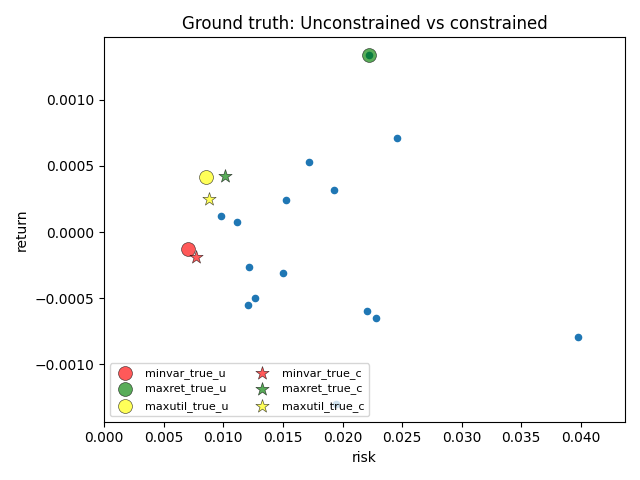

Now we are ready to analyze the results. In the figure below we compare constrained and unconstrained optimization.

plot_scatter(title='Ground truth: Unconstrained vs constrained',

true_u=optimal_portfolios_u,

true_c=optimal_portfolios_c)

Sample estimators of covmat and mean

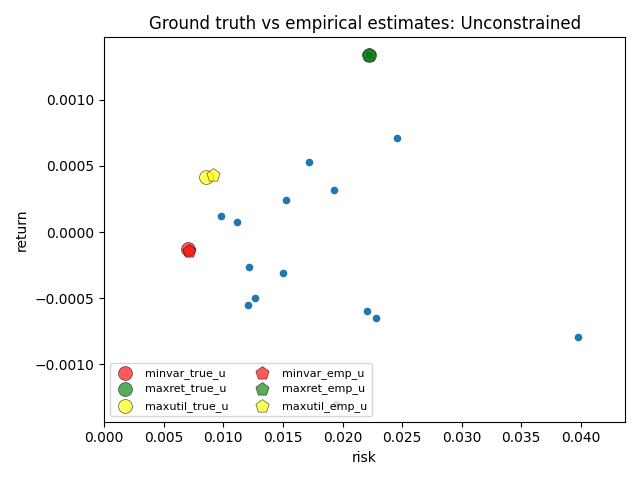

The main question now is whether we are able to find the optimal allocation just from the returns. One obvious way to do it is to explicitly estimate the mean and the covariance matrix and use the same optimizers as before to find the solution. If the number of samples is large enough, this should work well!

markowitz_emp = Markowitz(returns.mean().values, returns.cov().values)

emp_portfolios_u = {'minvar': markowitz_emp.minvar(max_weight=1.),

'maxret': markowitz_emp.maxret(max_weight=1.),

'maxutil': markowitz_emp.maxutil(gamma=gamma, max_weight=1.)}

emp_portfolios_c = {'minvar': markowitz_emp.minvar(max_weight=max_weight),

'maxret': markowitz_emp.maxret(max_weight=max_weight),

'maxutil': markowitz_emp.maxutil(gamma=gamma, max_weight=max_weight)}
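How large "large enough" is can be explored with a small experiment. The sketch below uses a hypothetical three-asset ground truth and reports how far the sample mean and the sample covariance matrix are from it for several sample sizes. The helper name `estimation_errors` and the chosen sizes are assumptions made for the illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical ground truth for three assets (not the S&P 500 values).
mean = np.array([0.001, 0.0005, 0.0])
covmat = np.array([[4.0e-4, 1.0e-4, 0.0],
                   [1.0e-4, 2.0e-4, 5.0e-5],
                   [0.0, 5.0e-5, 1.0e-4]])


def estimation_errors(n_samples):
    """Return the errors of the sample mean and sample covariance matrix."""
    samples = rng.multivariate_normal(mean, covmat, size=n_samples)
    mean_error = np.linalg.norm(samples.mean(axis=0) - mean)
    cov_error = np.linalg.norm(np.cov(samples, rowvar=False) - covmat)
    return mean_error, cov_error


errors = {n: estimation_errors(n) for n in (50, 1500, 50_000)}
for n, (mean_error, cov_error) in errors.items():
    print(f"n={n:>6}: mean error {mean_error:.2e}, covmat error {cov_error:.2e}")
```

Both errors shrink roughly with the square root of the number of samples, so the quality of the resulting portfolios depends directly on how much data is available.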

Let us see how the unconstrained portfolios compare with the ground truth.

plot_scatter(title='Ground truth vs empirical estimates: Unconstrained',

true_u=optimal_portfolios_u,

emp_u=emp_portfolios_u)

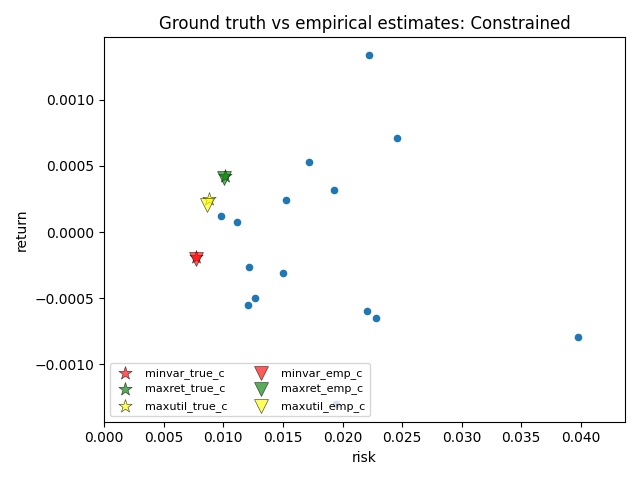

Similarly, let’s look at the constrained version.

plot_scatter(title='Ground truth vs empirical estimates: Constrained',

true_c=optimal_portfolios_c,

emp_c=emp_portfolios_c)

We can see that under this setup the empirical estimates yield close to optimal portfolios.

Implementation and setup of a deepdow network

We would like to construct a network that accepts no input features but has multiple learnable parameters that determine the result of a forward pass. Namely, we are going to use the NumericalMarkowitz layer. Our goal is to learn a mean return vector, a covariance matrix and gamma entirely from scratch. Note that this is fundamentally different from using sample estimators as done in the previous section.

class Net(torch.nn.Module, Benchmark):
    """Learn covariance matrix, mean vector and gamma.

    One can enforce max weight per asset.
    """

    def __init__(self, n_assets, max_weight=1.):
        super().__init__()

        self.force_symmetric = True
        self.matrix = torch.nn.Parameter(torch.eye(n_assets), requires_grad=True)
        self.exp_returns = torch.nn.Parameter(torch.zeros(n_assets), requires_grad=True)
        self.gamma_sqrt = torch.nn.Parameter(torch.ones(1), requires_grad=True)

        self.portfolio_opt_layer = NumericalMarkowitz(n_assets, max_weight=max_weight)

    def forward(self, x):
        """Perform forward pass.

        Parameters
        ----------
        x : torch.Tensor
            Tensor of shape `(n_samples, n_channels, lookback, n_assets)`.

        Returns
        -------
        weights_filled : torch.Tensor
            Of shape (n_samples, n_assets) representing the optimal weights as determined by the
            convex optimizer.
        """
        n = len(x)

        cov_sqrt = torch.mm(self.matrix, torch.t(self.matrix)) if self.force_symmetric else self.matrix

        weights = self.portfolio_opt_layer(self.exp_returns[None, ...],
                                           cov_sqrt[None, ...],
                                           self.gamma_sqrt,
                                           torch.zeros(1).to(device=x.device, dtype=x.dtype))
        weights_filled = torch.repeat_interleave(weights, n, dim=0)

        return weights_filled
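The `force_symmetric` branch multiplies the learnable matrix by its own transpose. The reason this is useful can be checked with a small numpy stand-in (numpy instead of torch is an assumption made to keep the sketch light): whatever values gradient descent assigns to the matrix, the product is symmetric and positive semidefinite, because x^T M M^T x equals the squared norm of M^T x.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for the unconstrained learnable parameter `self.matrix`.
matrix = rng.normal(size=(4, 4))

product = matrix @ matrix.T

is_symmetric = np.allclose(product, product.T)
min_eigenvalue = np.linalg.eigvalsh(product).min()
print(is_symmetric, min_eigenvalue)
```

The smallest eigenvalue is non-negative up to floating point error, so the optimizer never has to deal with an invalid matrix during training.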

Let us set some parameters. Note that lookback is irrelevant since our network expects no features. We need to define it anyway to be able to use deepdow dataloaders.

lookback, gap, horizon = 2, 0, 5 # lookback does not matter in this case, just used for consistency

batch_size = 256

n_epochs = 100 # We employ early stopping

We are ready to create a deepdow dataset.

X_list, y_list = [], []

for i in range(lookback, n_timesteps - horizon - gap + 1):
    X_list.append(returns.values[i - lookback: i, :])
    y_list.append(returns.values[i + gap: i + gap + horizon, :])

X = np.stack(X_list, axis=0)[:, None, ...]

y = np.stack(y_list, axis=0)[:, None, ...]

dataset = InRAMDataset(X, y, asset_names=returns.columns)

dataloader = RigidDataLoader(dataset, batch_size=batch_size)
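The rolling-window construction above can be checked in isolation. The sketch below wraps the same loop in a hypothetical helper `make_windows` and runs it on placeholder zeros with the dimensions used in this example, so that the resulting shapes and the number of batches are visible.

```python
import numpy as np


def make_windows(values, lookback, gap, horizon):
    """Split a (n_timesteps, n_assets) array into rolling feature/target windows."""
    n_timesteps = len(values)
    X_list, y_list = [], []
    for i in range(lookback, n_timesteps - horizon - gap + 1):
        X_list.append(values[i - lookback: i])
        y_list.append(values[i + gap: i + gap + horizon])
    # Add a channel axis: (n_samples, n_channels=1, window_length, n_assets).
    return np.stack(X_list)[:, None, ...], np.stack(y_list)[:, None, ...]


# Placeholder data with the same shape as the returns in this example.
values = np.zeros((1500, 15))
X, y = make_windows(values, lookback=2, gap=0, horizon=5)

n_batches = -(-len(X) // 256)  # ceiling division
print(X.shape, y.shape, n_batches)  # (1494, 1, 2, 15) (1494, 1, 5, 15) 6
```

With 1494 samples and a batch size of 256, a loader that keeps the last partial batch yields 6 batches per epoch, which is consistent with the `6/6` shown in the training progress output.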

The main feature of deepdow is that it only cares about the final allocation that minimizes some function of empirical portfolio returns. Unlike with the sample estimators in the previous section, one does not need to explicitly model the dynamics of the market and find the allocation via a two-step procedure. Below we define empirical counterparts (losses) of the convex optimization objectives. Note that all losses in deepdow follow the lower-the-better logic.

all_losses = {'minvar': StandardDeviation() ** 2,

'maxret': MeanReturns(),

'maxutil': MeanReturns() + gamma * StandardDeviation() ** 2}
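To see what such empirical losses measure, here is a simplified numpy stand-in for a single sample. It is not a description of deepdow's internals: the daily rebalancing, the population variance and the explicit minus sign in front of the mean (chosen to respect the lower-the-better convention) are assumptions of this sketch, and `empirical_losses` is a hypothetical helper.

```python
import numpy as np


def empirical_losses(weights, y, gamma):
    """Lower-is-better losses for one sample.

    weights : shape (n_assets,)
    y : future asset returns, shape (horizon, n_assets)
    """
    # Simplifying assumption: the portfolio is rebalanced to `weights` every day.
    portfolio_returns = y @ weights
    variance = portfolio_returns.var()
    mean = portfolio_returns.mean()
    return {'minvar': variance,
            'maxret': -mean,
            'maxutil': -mean + gamma * variance}


# Hypothetical future returns of two assets over a horizon of four days.
y = np.array([[0.01, 0.00],
              [-0.01, 0.00],
              [0.02, 0.00],
              [0.00, 0.00]])

all_in_first = empirical_losses(np.array([1.0, 0.0]), y, gamma=10)
all_in_second = empirical_losses(np.array([0.0, 1.0]), y, gamma=10)
print(all_in_first)
print(all_in_second)
```

Holding only the first asset gives a better (lower) `maxret` loss but a worse `minvar` loss than holding only the second one, and `maxutil` trades the two off through gamma.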

Training and evaluation

Now it is time to train!

deep_portfolios_c = {}
deep_portfolios_u = {}

for mode in ['u', 'c']:
    for loss_name, loss in all_losses.items():
        network = Net(n_assets, max_weight=max_weight if mode == 'c' else 1.)
        run = Run(network,
                  loss,
                  dataloader,
                  val_dataloaders={'train': dataloader},
                  callbacks=[EarlyStoppingCallback('train', 'loss', patience=3)])

        run.launch(n_epochs=n_epochs)

        # Results
        w_pred = network(torch.ones(1, n_assets)).detach().numpy().squeeze()  # the input does not matter

        if mode == 'c':
            deep_portfolios_c[loss_name] = w_pred
        else:
            deep_portfolios_u[loss_name] = w_pred

Epoch 0: 100%|##########| 6/6 [00:00<00:00, 27.50it/s, loss=0.00007, train_loss=0.00006]

Epoch 1: 100%|##########| 6/6 [00:00<00:00, 27.53it/s, loss=0.00005, train_loss=0.00005]

Epoch 2: 100%|##########| 6/6 [00:00<00:00, 27.13it/s, loss=0.00005, train_loss=0.00005]

Epoch 3: 100%|##########| 6/6 [00:00<00:00, 27.80it/s, loss=0.00005, train_loss=0.00005]

Epoch 4: 100%|##########| 6/6 [00:00<00:00, 27.84it/s, loss=0.00005, train_loss=0.00005]

Epoch 5: 100%|##########| 6/6 [00:00<00:00, 27.55it/s, loss=0.00005, train_loss=0.00005]

Epoch 6: 100%|##########| 6/6 [00:00<00:00, 27.45it/s, loss=0.00005, train_loss=0.00005]

Epoch 7: 100%|##########| 6/6 [00:00<00:00, 27.53it/s, loss=0.00005, train_loss=0.00005]

Epoch 8: 100%|##########| 6/6 [00:00<00:00, 27.14it/s, loss=0.00005, train_loss=0.00005]

Epoch 9: 100%|##########| 6/6 [00:00<00:00, 27.03it/s, loss=0.00005, train_loss=0.00005]

Epoch 10: 100%|##########| 6/6 [00:00<00:00, 27.03it/s, loss=0.00005, train_loss=0.00005]

Epoch 11: 100%|##########| 6/6 [00:00<00:00, 18.40it/s, loss=0.00005, train_loss=0.00005]

Epoch 12: 100%|##########| 6/6 [00:00<00:00, 27.52it/s, loss=0.00005, train_loss=0.00005]

Epoch 13: 100%|##########| 6/6 [00:00<00:00, 27.35it/s, loss=0.00005, train_loss=0.00005]

Training interrupted

Training stopped early because there was no improvement in train_loss for 3 epochs

Epoch 0: 100%|##########| 6/6 [00:00<00:00, 27.96it/s, loss=-0.00013, train_loss=-0.00058]

Epoch 1: 100%|##########| 6/6 [00:00<00:00, 27.00it/s, loss=-0.00072, train_loss=-0.00090]

Epoch 2: 100%|##########| 6/6 [00:00<00:00, 27.15it/s, loss=-0.00104, train_loss=-0.00122]

Epoch 3: 100%|##########| 6/6 [00:00<00:00, 26.92it/s, loss=-0.00137, train_loss=-0.00162]

Epoch 4: 100%|##########| 6/6 [00:00<00:00, 26.60it/s, loss=-0.00180, train_loss=-0.00191]

Epoch 5: 100%|##########| 6/6 [00:00<00:00, 26.42it/s, loss=-0.00191, train_loss=-0.00191]

Epoch 6: 100%|##########| 6/6 [00:00<00:00, 26.87it/s, loss=-0.00190, train_loss=-0.00191]

Epoch 7: 100%|##########| 6/6 [00:00<00:00, 27.20it/s, loss=-0.00193, train_loss=-0.00191]

Epoch 8: 100%|##########| 6/6 [00:00<00:00, 27.34it/s, loss=-0.00191, train_loss=-0.00191]

Epoch 9: 100%|##########| 6/6 [00:00<00:00, 27.50it/s, loss=-0.00192, train_loss=-0.00191]

Epoch 10: 100%|##########| 6/6 [00:00<00:00, 26.91it/s, loss=-0.00192, train_loss=-0.00191]

Training interrupted

Training stopped early because there was no improvement in train_loss for 3 epochs

Epoch 0: 100%|##########| 6/6 [00:00<00:00, 26.36it/s, loss=0.00087, train_loss=0.00049]

Epoch 1: 100%|##########| 6/6 [00:00<00:00, 26.02it/s, loss=0.00041, train_loss=0.00030]

Epoch 2: 100%|##########| 6/6 [00:00<00:00, 25.97it/s, loss=0.00030, train_loss=0.00022]

Epoch 3: 100%|##########| 6/6 [00:00<00:00, 25.63it/s, loss=0.00019, train_loss=0.00018]

Epoch 4: 100%|##########| 6/6 [00:00<00:00, 26.23it/s, loss=0.00016, train_loss=0.00015]

Epoch 5: 100%|##########| 6/6 [00:00<00:00, 25.79it/s, loss=0.00015, train_loss=0.00015]

Epoch 6: 100%|##########| 6/6 [00:00<00:00, 25.93it/s, loss=0.00015, train_loss=0.00015]

Epoch 7: 100%|##########| 6/6 [00:00<00:00, 25.81it/s, loss=0.00014, train_loss=0.00015]

Epoch 8: 100%|##########| 6/6 [00:00<00:00, 25.96it/s, loss=0.00014, train_loss=0.00015]

Training interrupted

Training stopped early because there was no improvement in train_loss for 3 epochs

Epoch 0: 100%|##########| 6/6 [00:00<00:00, 27.92it/s, loss=0.00007, train_loss=0.00006]

Epoch 1: 100%|##########| 6/6 [00:00<00:00, 27.26it/s, loss=0.00006, train_loss=0.00006]

Epoch 2: 100%|##########| 6/6 [00:00<00:00, 27.20it/s, loss=0.00006, train_loss=0.00006]

Epoch 3: 100%|##########| 6/6 [00:00<00:00, 27.18it/s, loss=0.00006, train_loss=0.00006]

Epoch 4: 100%|##########| 6/6 [00:00<00:00, 18.82it/s, loss=0.00006, train_loss=0.00006]

Epoch 5: 100%|##########| 6/6 [00:00<00:00, 27.30it/s, loss=0.00006, train_loss=0.00006]

Epoch 6: 100%|##########| 6/6 [00:00<00:00, 27.32it/s, loss=0.00006, train_loss=0.00006]

Training interrupted

Training stopped early because there was no improvement in train_loss for 3 epochs

Epoch 0: 100%|##########| 6/6 [00:00<00:00, 27.97it/s, loss=-0.00004, train_loss=-0.00037]

Epoch 1: 100%|##########| 6/6 [00:00<00:00, 27.01it/s, loss=-0.00037, train_loss=-0.00037]

Epoch 2: 100%|##########| 6/6 [00:00<00:00, 27.28it/s, loss=-0.00037, train_loss=-0.00037]

Epoch 3: 100%|##########| 6/6 [00:00<00:00, 26.75it/s, loss=-0.00036, train_loss=-0.00037]

Training interrupted

Training stopped early because there was no improvement in train_loss for 3 epochs

Epoch 0: 100%|##########| 6/6 [00:00<00:00, 26.38it/s, loss=0.00089, train_loss=0.00059]

Epoch 1: 100%|##########| 6/6 [00:00<00:00, 26.30it/s, loss=0.00061, train_loss=0.00059]

Epoch 2: 100%|##########| 6/6 [00:00<00:00, 26.07it/s, loss=0.00060, train_loss=0.00059]

Epoch 3: 100%|##########| 6/6 [00:00<00:00, 26.17it/s, loss=0.00059, train_loss=0.00059]

Epoch 4: 100%|##########| 6/6 [00:00<00:00, 26.00it/s, loss=0.00058, train_loss=0.00059]

Epoch 5: 100%|##########| 6/6 [00:00<00:00, 26.10it/s, loss=0.00059, train_loss=0.00059]

Epoch 6: 100%|##########| 6/6 [00:00<00:00, 25.80it/s, loss=0.00060, train_loss=0.00060]

Training interrupted

Training stopped early because there was no improvement in train_loss for 3 epochs
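The "no improvement in train_loss for 3 epochs" message above reflects a patience-style stopping rule. The following is a minimal sketch of such a rule in plain Python, not deepdow's actual `EarlyStoppingCallback` implementation; the helper name `epoch_of_early_stop` and the strict-improvement criterion are assumptions made for illustration. The sample values are the rounded `train_loss` figures printed for the run that stopped after epoch 3, so the unrounded losses may have differed slightly.

```python
def epoch_of_early_stop(train_losses, patience=3):
    """Return the epoch index after which training would stop, or None.

    Hypothetical helper: stops once the loss has failed to strictly
    improve on its best value for `patience` consecutive epochs.
    """
    best = float("inf")
    stale_epochs = 0
    for epoch, loss in enumerate(train_losses):
        if loss < best:
            # New best value: reset the counter of epochs without improvement.
            best = loss
            stale_epochs = 0
        else:
            stale_epochs += 1
            if stale_epochs >= patience:
                return epoch
    return None


# Rounded train_loss values as printed in one of the logs above.
rounded_losses = [-0.00037, -0.00037, -0.00037, -0.00037]
stop_epoch = epoch_of_early_stop(rounded_losses, patience=3)
print(f"Training would stop after epoch {stop_epoch}")
```

Under this rule the first epoch always sets the best value, so a run with a patience of 3 executes at least four epochs before it can be interrupted.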

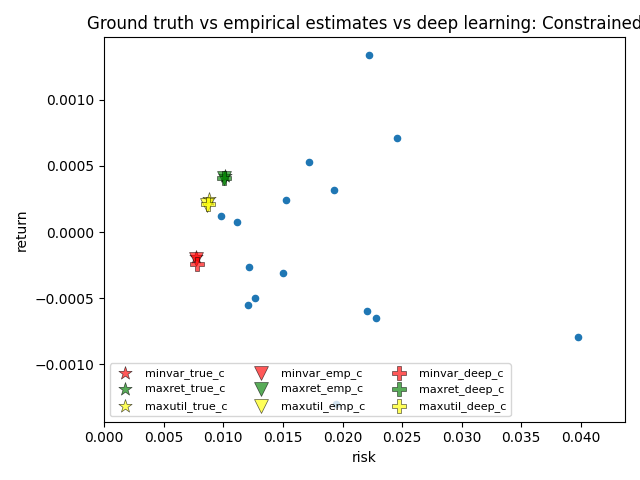

Unconstrained case:

plot_scatter(title='Ground truth vs empirical estimates vs deep learning: Unconstrained',
             true_u=optimal_portfolios_u,
             emp_u=emp_portfolios_u,
             deep_u=deep_portfolios_u)

Constrained case:

plot_scatter(title='Ground truth vs empirical estimates vs deep learning: Constrained',
             true_c=optimal_portfolios_c,
             emp_c=emp_portfolios_c,
             deep_c=deep_portfolios_c)

Total running time of the script: ( 0 minutes 20.439 seconds)